The power of play

A new collaboration between MIT.nano and NCSOFT, a video game development company based in South Korea, will seek to chart the future of how people interact with the world and each other via the latest science and technology for gaming.

The creation of the MIT.nano Immersion Lab Gaming Program, says Brian W. Anthony, the associate director of MIT.nano, arose out of a series of discussions with NCSOFT, a founding member of the MIT.nano industry consortium. “How do we apply the language of gaming to technology research and education?” Anthony asks. “How can we use its tools — and develop new ones — to connect different domains, give people better ways to collaborate, and improve how we communicate and interact?”

As part of the collaboration, NCSOFT will provide funding to acquire hardware and software tools to outfit the MIT.nano Immersion Lab, the facility’s research and collaboration space for investigations in artificial intelligence, virtual and augmented reality, artistic projects, and other explorations at the intersection of hard tech and human beings. The program, set to run for four years, will also offer annual seed grants to MIT faculty and researchers for projects in science, technology, and applications of:

- gaming in research and education;

- communication paradigms;

- human-level inference; and

- data analysis and visualization.

A mini-workshop to bring together MIT principal investigators and NCSOFT technology representatives will be held at MIT.nano on April 25.

Anthony, who is also faculty lead for the MechE Alliance Industry Immersion Projects and principal research scientist in the Department of Mechanical Engineering and the Institute for Medical Engineering and Science, says the collaboration will support projects that explore data collection for immersive environments, novel techniques for visualization, and new approaches to motion capture, sensors, and mixed-reality interfaces.

Collaborating with a gaming company, he says, comes with intriguing opportunities for new research and education paradigms. Specific topics the seed-grant program hopes to prompt include improved detection and reduction of dizziness associated with immersive headsets; automatic voice generation based on the appearance or emotional states of virtual characters; and reducing the cost and improving the accuracy of marker-less, video-based motion capture in open space.

Another area of interest, Anthony says, is the development of new tools and techniques for detecting and learning how personal gestures communicate intent. “I gesticulate a lot when I’m talking,” he says. “What does it mean, and how do we appropriately capture and model that?” And what if, he adds, researchers could apply what they learn to empower a doctor in a clinical setting? “Maybe the doctor wants to palpate an image of tissue, which includes measures of elasticity, to see how it deforms or slice it to see how it responds. Tools that can record and visualize these gestures and reactions could be tremendously powerful.”

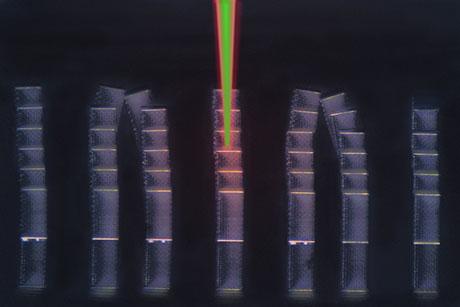

The collaboration’s work will involve both creating software and developing new hardware. The Immersion Lab Gaming Program’s leaders hope to spur a range of efforts exploring visual, acoustic, and haptic technologies. For example, Anthony says, sound waves outside the range of human hearing can be used for tactile purposes. Expressed with the right tools, these waves can actually be touched in mid-air.

The combination of hardware and software development was one of the key factors in NCSOFT’s decision to work with MIT and to become one of MIT.nano’s founding industry partners, Anthony says. And the third-floor Immersion Lab — specifically designed to facilitate immersive digital experiences — provides a flexible platform for the new program.

“Most of this building is about making or visualizing physical things at the nanometer scale,” Anthony adds. “But this space will be a magnet for people who do data-science research. We want to use the Immersion Lab to increase friction between the hardware and software folks — and I mean friction in a good way, like rubbing elbows — and to interact with the data coming from the building and to imagine the new hardware required to better interact with data, which can then be made in MIT.nano.”

MIT.nano has released the program’s first call for proposals. Applications are due May 25.