Robotics in the 21st Century

Building Robots for the People

The image that comes to mind when you hear Professor John Leonard describe his dream of developing a robot that is what he calls “a lifelong learner” is so cinematic it’s almost hard to believe:

“Maybe it operates for six or eight hours a day,” he begins. “It plugs itself in overnight, recharges its batteries, and ‘dreams’ about all the experiences it had that day. It does that same thing night after night, building models up over time so that it gradually gains a better understanding of the world and a better decision-making capability for taking the right actions.”

That something like this might be possible in our lifetime prompts us to ask ourselves if we’re not the ones who are dreaming.

It raises another question too: To what end? What place do advanced robotics have in the timeline of human progress? What do we really gain from these advancements? Is it simply a nerdy satisfaction that comes from knowing it can be done? Is it just a self-fulfillment of our science-fiction fantasies and fears?

Faculty in the MIT Department of Mechanical Engineering believe there is more to it than that. There is human progress to be made, and not just in the areas of first-world conveniences and national defense, although those possibilities exist too. The potential for improved human health and safety is significant – particularly in the field of assistive technologies for those suffering from paralysis and immobility, and in our ability to deal with or avoid emergencies, such as a threatening fire, a devastating nuclear disaster, a tragic car crash, or a dangerous police or military situation.

“I look at where robots can be really valuable,” says Associate Professor Sangbae Kim. “There are a lot of people looking for jobs, but we’re not interested in taking their jobs and replacing them with a robot. If you think seriously about where you can use a robot, where you really need a robot, it’s in environments where humans could not survive, such as where there have been nuclear disasters. There are many power plants in Europe that have had to shut their doors because people can’t get in to them, or where they need to dispose of waste but can’t because it is too dangerous for a human.”

In situations such as these, where robots can deliver aid that isn’t otherwise accessible, robots can be incredibly helpful. In a hot fire, a humanoid robot like the one Professor Kim is creating could walk into a building, find someone who is trapped, break down the door, pick them up, and carry them safely out. In a hostage situation or a police shootout, a robot could enter an uncertain circumstance, survey the scene, and report back to the police about how many people there are, whether they are carrying weapons, and so on, similar to what happened during the aftermath of the Boston Marathon bombing in 2013 when police found the suspects’ abandoned car and sent PackBots – developed by iRobot, a company co-founded by MechE alumna Helen Greiner – in for reconnaissance.

*****************

Associate Professor Sangbae Kim: Disaster Response and Rescue

Photo by: Tony Pulsone

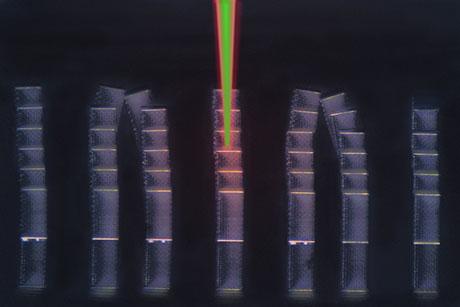

For many, Professor Kim’s robotic cheetah needs no introduction. The nature-inspired quadruped machine is as graceful and nimble as it is strong and powerful, like the cheetah itself. Built with electric motors – as opposed to hydraulic motors – and self-designed actuators, in collaboration with Professor Jeff Lang in MIT’s Electrical Engineering and Computer Science Department, the robotic cheetah can bear the impact of running for more than an hour at speeds up to 13 mph, can autonomously jump over obstacles and land smoothly, and is powered by an 8-kilogram battery that lasts for two hours.

But you may be surprised to hear that the cheetah is only half of the picture. The other half is HERMES, a quadruped humanoid robot that can stand up on two legs and use its other two limbs for hard mechanical work and object manipulation.

“If you look at all of the current robots in the world, very few of them can do anything that involves dynamic interaction with environments,” says Professor Kim. “Our cheetah is constantly interacting dynamically with the environment, but it is not doing any of the delicate position control tasks that manufacturing robots do. So I’m focused on bringing this dynamically interactive capability and infrastructure from the cheetah to the manipulation world. This will allow our robot to tackle unstructured and unexpected situations in disaster sites.”

HERMES and the cheetah will soon merge to become a life-saving disaster response robot. That union will result in a robot that looks like a mix between a monkey and a human, and will be able to autonomously direct itself to a dangerous scene where it could, for example, use an ax to break down a door or find a trapped child, so that a firefighter need not be sent into a life-threatening situation. It could also walk into a nuclear disaster site to inspect the situation and take emergency action.

Modern-day robots have the physical speed in motion to carry out such tasks – in fact, they’re even faster than humans, who, according to Professor Kim, take about 100 to 200 milliseconds to physically respond, whereas robots can respond in a millisecond or two and generate speed much higher than humans can. But what about the quick thinking that is required in emergency situations?

“If a door is jammed and the robot needs to break it down using an ax, there is the question of what strike pattern to use,” says Professor Kim. “It becomes a very complex task, and developing autonomous algorithms for such a variable, complex task can be extremely challenging at this point.”

To overcome this intelligence limitation in robots, Professor Kim is designing a system that incorporates a human operator in the loop. In a virtual reality-type scene, the human operator wears a sensor suit and views the situation through a camera installed on the robot, then gives the robot instructions by simply moving his or her own body in response to the robot’s surroundings and goals, like a “surrogate,” as Professor Kim calls it.

And here is where we meet the one major technical challenge standing between Professor Kim and a saved life: the question of balance – how an operator feels the balance of the robot. Professor Kim and his research group are now working to supply this missing piece of the puzzle by developing a force feedback system that informs the human operator about the robot’s balance, allowing the operator to “feel” whether it is going to fall or slip and then virtually correct its center of mass.

He estimates that within five years the technology will be ready for action, and within another five, the disaster bot – part cheetah, part human – will be properly tested and suited up with fireproof materials, ready to jump into the fray.

*****************

Professor John Leonard: Simultaneous Localization and Mapping (SLAM)

There are some feats, such as quick decision-making, that robots simply aren’t well suited for – and many technical questions have yet to be answered. Science-fiction movies and mainstream media might lead us to believe that we’re so close to full robot autonomy that we can reach out and touch it, but according to faculty members in MechE, we’re not as close as it may seem.

“Abilities such as object detection and recognition, interpreting the gestures of people, and human-robot interactions are still really challenging research questions,” says Professor John Leonard. “A fully self-driving car, able to drive autonomously in Boston at any time of year, is still quite a long time away, in my opinion, despite some of the predictions being made to the contrary.”

Professor John Leonard was one of the first researchers to work on the problem of simultaneous localization and mapping (SLAM). The question was how to deal with uncertainties in robotic navigation so that a robot can locate and navigate itself on a map that it’s still in the process of building.

SLAM asks three questions: How do you best represent the environment? What trajectory best fits the collected data? What are the constraints of the physical system?

Professor Leonard has been working on the most effective ways of answering these questions since his graduate student days at Oxford University. Back then, he says, the sensors to collect data were primitive and the ability to compute large amounts of data was limited. There was – and still is – a lot of uncertainty as a robot moves in space and time toward a future that is completely unknown to it – unknown terrain, unknown objects, unknown twists and turns.

He gives the example of a robot car moving through the world. “If you just count the wheel rotations and try to integrate the change in position, accounting for the changes in heading, there is noise in those measurements. And over time as you integrate that you get increased error. The robot becomes less sure of where it is relative to where it started.

“Also,” he continues, “when a robot measures the world, there are errors in those measurements. Some of the sensor readings are totally sporadic. There is ambiguity. So measurements are uncertain both in how the robot moves and its perception of the world.”
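The drift Professor Leonard describes can be illustrated with a toy simulation. The sketch below is only an illustration, not his group's code: the function name `dead_reckon`, the noise levels, and the step size are all assumptions chosen for the example. It integrates noisy wheel-distance and heading readings and records how far the estimated position strays from the true one.

```python
import math
import random


def dead_reckon(steps, step_len=1.0, turn=0.0,
                dist_noise=0.02, heading_noise=0.01, seed=0):
    """Integrate noisy odometry and return the position error after each step.

    All parameters are hypothetical: step_len is the true distance per step,
    turn is the true heading change per step (radians), and the two noise
    values are standard deviations of Gaussian measurement noise.
    """
    rng = random.Random(seed)
    tx = ty = th = 0.0  # true pose
    ex = ey = eh = 0.0  # pose estimated from noisy measurements
    errors = []
    for _ in range(steps):
        # What the robot actually does.
        th += turn
        tx += step_len * math.cos(th)
        ty += step_len * math.sin(th)
        # What the robot thinks it did, based on noisy readings.
        meas_dist = step_len + rng.gauss(0.0, dist_noise)
        meas_turn = turn + rng.gauss(0.0, heading_noise)
        eh += meas_turn
        ex += meas_dist * math.cos(eh)
        ey += meas_dist * math.sin(eh)
        errors.append(math.hypot(ex - tx, ey - ty))
    return errors


if __name__ == "__main__":
    errs = dead_reckon(500)
    print("error after 10 steps: ", errs[9])
    print("error after 500 steps:", errs[499])
```

Because each small heading error tilts every later step, the position error tends to grow the longer the robot integrates, which is why a robot that relies on odometry alone becomes less and less sure of where it is.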

Even in places where the uncertainty is decreased, such as a location where the robot has already been, there is the issue of “dead reckoning error,” meaning that the map the robot develops as it moves is slightly off from its actual trajectory, so that when the robot comes back to a known location, identified by the camera’s position, the map shows that it’s slightly adrift from its actual location. Without finding a way to close the loop, the robot becomes lost and can no longer progress in an accurate way.

Professor Leonard, along with collaborators in Ireland, has developed an advanced algorithm that can correct for drift to close loops in dense 3D maps.

In his earlier days, when image-processing power was rather weak, Professor Leonard’s solution was focused on sparsity – the idea that less data was more. Instead of collecting entire sets of data, researchers gathered points intermittently, and filled in the gaps with estimations. But now, he says, thanks to video game console developers, GPUs (graphics processing units) are so advanced that, instead of settling for sparse data, he’s gathering data sets that are as dense as possible.

And it’s this improvement in technology – along with techniques to close the loop – that led Professor Leonard to realize that, in combination, the two could enable something that had never been done before in autonomous mapping: an expanded map that wasn’t restricted to a small area.

“If you can accrue the information from a big area,” he explains, “then when you come back to where you started, you can use all that information as a constraint – and propagate that back through your trajectory estimate with improved confidence in its accuracy.”
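A deliberately simplified sketch can show what “propagating a constraint back through the trajectory” means. It is not the algorithm Professor Leonard and his collaborators developed; real SLAM systems solve a much richer optimization over poses and map points. Here the hypothetical function `close_loop` assumes a 2D trajectory that is known to end where it began, and it spreads the end-point mismatch linearly over all earlier positions.

```python
def close_loop(trajectory):
    """Correct a drifted 2D trajectory that should end where it started.

    trajectory is a list of (x, y) estimates. The mismatch between the last
    and first points is treated as accumulated drift and removed in
    proportion to how far along the path each point lies. This linear
    spreading is an assumption made for illustration only.
    """
    n = len(trajectory)
    if n < 2:
        return list(trajectory)
    x0, y0 = trajectory[0]
    xn, yn = trajectory[-1]
    drift_x, drift_y = xn - x0, yn - y0
    corrected = []
    for i, (x, y) in enumerate(trajectory):
        weight = i / (n - 1)  # 0 at the start, 1 at the revisited location
        corrected.append((x - weight * drift_x, y - weight * drift_y))
    return corrected


if __name__ == "__main__":
    # A square loop whose final estimate has drifted away from the start.
    drifted = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.2, 0.1)]
    for point in close_loop(drifted):
        print(point)
```

The design choice to weight the correction by path position reflects the idea that later estimates have accumulated more drift than earlier ones; recognizing the starting point supplies the one hard fact that anchors the whole correction.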

His current work is the integration of object recognition into SLAM. “If you can represent the world in terms of objects, you will get a more efficient and compact representation of all the raw data. It’s also a way to gain a more semantically meaningful means of presentation for potentially interacting with people. If you could train a system to recognize objects, then the robot could detect the objects and use them to map their locations, and even perhaps manipulate them and move them through the world.”

*****************

Professor Domitilla Del Vecchio: On-Board Vehicle Safety Systems

Considering, then, that humans are strong in areas such as intelligence where robots are not, and vice versa, it makes sense that several MechE faculty are focusing on ways to combine their best characteristics into one effective human-robot system. (MechE’s own Professor Emeritus Thomas Sheridan – who, in 1978, established an eight-level taxonomy of human-machine interactions that became the basis for understanding how people interact with products and complex systems – was a pioneer in this field.)

Associate Professor Domitilla Del Vecchio is creating on-board safety systems that provide semi-autonomous control for commercial vehicles, particularly in congested situations prone to crashes such as highway merges, rotaries, and four-way intersections. Her system is programmed to take control of the car as needed in order to achieve safety.

“Full autonomy on the road is probably not going to be realistic for the next five to 10 years,” she says. “We are developing a system that can monitor the driver, monitor the situation in the traffic, and intervene only when it is absolutely necessary.”

You’re probably familiar with a scene like this: A car that was waiting at a four-way intersection starts accelerating. Almost at the same time, another car starts moving toward the first, out of turn, trying to make a left. Before surrounding drivers can even consciously process what is happening, brakes screech and a loud crash pierces the air. Automobile pieces go flying.

Professor Del Vecchio is developing onboard safety controls to help prevent a situation just like that. Her group is designing a safety system that would anticipate this type of collision – before you do – and initiate automatic action on your behalf to prevent it. An extremely complex computation would go on behind the scenes to consider all the facts of the situation – placement of cars, speeds, and angles – to determine the probability that the crash will take place.

But there’s a problem: The algorithms to do those computations are incredibly complex, and to do them online would take minutes, which of course you don’t have.

“So many of these algorithms that have been developed are beautiful,” says Professor Del Vecchio. “You can run them in simulation, and they work very well. But if you want to implement them on a real-time platform, it’s never going to work.”

Instead of developing algorithms that do the full computations, she has determined where approximations can be made in the algorithms to save time. Since there are certain monotonic aspects of a car’s behavior – brake harder, and the speed decreases; increase the throttle, and the speed increases – she has been able to develop algorithms that scale, and calibrate them for a reasonable range of uncertainties, which can also be modeled from data.

“With approximations,” she says, “of course you lose something, so we are also able to quantify those losses and guarantee a certain success rate, a probabilistic safety. In return, we gain quick autonomous decision-making in polynomial time.”
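To see why monotonic behavior saves computation, consider a rough sketch. It is not Professor Del Vecchio's algorithm, and the point-mass car model, the function names, and the acceleration limits are all assumptions. If harder braking always leaves the car farther back and more throttle always puts it farther ahead, then every position the car could reach lies between just two simulated extremes, so there is no need to search over every possible driver input.

```python
def simulate(pos, speed, accel, horizon, dt=0.1):
    """Advance a point-mass car under constant acceleration.

    Speed is clipped at zero so that braking never makes the car reverse.
    Units are assumed to be meters and seconds.
    """
    steps = int(round(horizon / dt))
    for _ in range(steps):
        speed = max(0.0, speed + accel * dt)
        pos += speed * dt
    return pos


def position_bounds(pos, speed, horizon, max_brake=-6.0, max_throttle=3.0):
    """Bound where the car can be after `horizon` seconds.

    Relies on monotonicity: the hardest braking gives the lowest position
    and the strongest throttle gives the highest. The limits are made up.
    """
    lowest = simulate(pos, speed, max_brake, horizon)
    highest = simulate(pos, speed, max_throttle, horizon)
    return lowest, highest


def may_enter_zone(bounds, zone):
    """Return True if the reachable interval overlaps a conflict zone."""
    lowest, highest = bounds
    zone_start, zone_end = zone
    return lowest <= zone_end and highest >= zone_start


if __name__ == "__main__":
    bounds = position_bounds(pos=0.0, speed=10.0, horizon=2.0)
    print("reachable interval:", bounds)
    print("could reach intersection at 20-25 m:", may_enter_zone(bounds, (20.0, 25.0)))
```

Two simulations replace a search over all inputs, which is the kind of saving that makes a real-time check plausible. The price is conservatism: the interval says where the car could be, not where it will be.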

Her research group is also working to find ways of decreasing the uncertainty. If communication is possible from vehicle to vehicle, then a considerable amount of uncertainty is removed and vehicles can cooperate with each other. Although there is a delay that usually renders the information old by the time it arrives, she has developed an algorithm that uses the old information and makes a useful prediction about the present based on it.

Professor Del Vecchio’s group has been collaborating with Toyota since 2008 and with Chrysler since 2013. They have tested their safety systems on full-scale vehicles in realistic scenarios and have found them to be successful. Their ultimate goal is to enable vehicles to become smart systems that optimally interact with both drivers and the environment.

*****************

Professor Harry H. Asada: Wearable Robotics

Since Professor Emeritus Sheridan discovered decades ago that humans aren’t particularly good at monitoring otherwise autonomous robots, faculty members like Professor Harry Asada want to take the interactions even further. He has a long-term vision of creating a wearable robot that is so well attuned to its operator through advanced sensing and interpretation that it becomes an extension of their mind and body.

Professor Asada and his research group are focused on developing robotic fingers, arms, and legs to help stroke victims or persons with a physical handicap to compensate for lost functionality, or to enhance human capabilities. It may sound a bit supernatural, but for Professor Asada, it’s anything but. His goal is to build wearable robots that can be perceived as an extension of human limbs. To achieve this goal, his robots must work naturally, through implicit direction from the user, rather than through explicit commands.

For his work on robotic fingers, his team started with neuromotor control theory.

“There are 600 muscles and 200 degrees of freedom in the human body,” says Professor Asada. “Our brain does not control all 600 muscles individually. When it sends a command for movement, each command coordinates a group of muscles working together. When you grasp something, the brain is telling the muscles to move according to certain patterns.”

Applying that theory to their development of wearable extra fingers, they found that there are three main patterns of motion that, when used in various combinations, make up 95% of common hand movements. The first is the opening and closing of the fingers in sync with each other; the second is an out-of-phase movement; and the third is a twist or rotation of the fingers.

Next, they conducted studies with patients and doctors at Spaulding Rehabilitation Hospital and analyzed users’ placement and orientation of their hands while they performed approximately 100 common daily chores, then asked them where additional fingers would be most useful.

Outfitting their test subjects with sensor gloves to detect various combinations of bending joints, Professor Asada’s team was able to design a set of control algorithms based on the resulting data and the known hand-motion patterns, and ultimately program robotic fingers to be prepared for a variety of tasks.

On their functioning hand, users wear a glove with embedded sensors that detect their movements. Based on those movements, Professor Asada’s robotic hand, which adds two extra fingers (and six joints), can interpret that data as a certain task and coordinate its two fingers to complement the healthy hand. Professor Asada has also embedded force sensors in the robotic fingers to determine if a stable grasp has been achieved.

This gets to the core of Professor Asada’s research: a robot that can sense which task a user is about to tackle based on their posture, and intuitively and naturally complement it in real time. His ultimate goal is for users of his wearable robotics to forget that they’re wearing them altogether – the robots would be so intuitive and self-sustaining that they would feel like a natural part of the body.

It’s this same principle that drives his other research in wearable robotics as well. His group is also developing extra arms that could be used in manufacturing scenarios where the labor is strenuous, such as work that is consistently done with one’s arms raised above the shoulders, or work that would normally require two people. He’s using similar sensors to detect the placement and movement of shoulders, so that the robot arms can determine the task – for example, soldering – and support it; he’s even pursuing the use of a camera that can scan eye movements to determine where a person is looking.

*****************

Professor Kamal Youcef-Toumi: Advanced Safety and Intelligence

How many times have you wished aloud to an empty room for some help with a task – to assist with a car repair, clean up the house before a dinner party, or contribute to an assembly line? You probably said it in vain, resigned to the fact that it would never happen in your lifetime, but Professor Kamal Youcef-Toumi has something that will change your mind.

Like Professor Asada, Professor Kamal Youcef-Toumi is developing robots that can sense a gesture or expression from a human collaborator and understand the subtle complexities in meaning – and then “instinctively” understand how best to proceed.

Along with his research group, he is working to implement high-level sensing capabilities in robots that can aid in industrial fields such as manufacturing. One of these capabilities is, well, let’s call it “sensitivity.” He is developing robots that will quite literally “sense” subtle cues from a human who is working alongside them, and then, through a complex web of algorithms, decipher those cues and adapt to them. Given that some humans have trouble sensing implicit cues through body language or hand gestures, the thought of a robot picking up on such non-verbal hints is impressive indeed.

“The robot is a machine, so we have to give it information,” says Professor Youcef-Toumi, “but we want it to also have some intelligence of its own so that it’s building on the information we’ve given it, and we don’t have to tell it every little step.

“Imagine we are sitting in a restaurant,” he continues. “We order tea. We don’t have to say to the server, ‘Please bring two cups, two spoons, two napkins, and two saucers.’ All of that is implied. This is similar. The robot starts with some information and uses new information to keep building on that.”

In Professor Youcef-Toumi’s demonstrations, his research group will present the concept using well-defined tasks and environments, but his final robot will be able to make decisions about new interactions and environments in real time. He says that the robot – which is not being designed as a humanoid robot at this point – will sense tools or objects, their placement, and human gestures and motion, among other things, to paint a picture for itself about what it is expected to do.

“In the end,” says Professor Youcef-Toumi, “we want the robots and humans working together as if the robot is another human with you, complementing your work, even when you leave the scene and come back. It should adjust to resume complementing you and proceed in that way.”

Professor Youcef-Toumi has also been working on a robot that can swim through water or oil in a pipe system, or crawl through one filled with natural gas. The robot his group has designed leverages pressure changes to detect particularly small leaks (down to approximately 1 mm) in a pipe made of any material, carrying any product. The robot can pinpoint a tiny leak that a current system would miss, determine exactly at what angle and location on the pipe’s circumference it exists, and navigate there on its own with knowledge of where it is in the context of the whole system. All of this data is collected, recorded, and transmitted wirelessly via relays to a control center.

“Small leaks that go undetected for a long time can weaken the foundation of a building, or collapse a street or sidewalk. Contamination is also a big safety concern. Most of the time the water is going from the pipe to the outside, but there are times when the pressure changes and the water flows outside, mixes with whatever is around the pipe, and then flows back in,” he says.

*****************

It’s hard to predict whether or not full automation will happen in our lifetimes, but it’s not a stretch to say that here in MechE, we get a little bit closer every day. We can see the benefits to humankind at each step, from prevention to response, and we wait for the day when robots become dreamers themselves.